Meta says its ultimate goal with its VR hardware is to make a comfortable, compact headset with visual fidelity that’s ‘indistinguishable from reality’. Today the company revealed its latest VR headset prototypes which it says represent steps toward that goal.

Meta has made no secret of the fact that it’s pouring tens of billions of dollars into its XR efforts, much of which is going to long-term R&D through its Reality Labs Research division. Apparently in an effort to shine a bit of light onto what that money is actually accomplishing, the company invited a group of press to sit down for a look at its latest accomplishments in VR hardware R&D.

Reaching the Bar

To start, Meta CEO Mark Zuckerberg spoke alongside Reality Labs Chief Scientist Michael Abrash to explain that the company’s ultimate goal is to build VR hardware that meets all the visual requirements to be accepted as “real” by your visual system.

VR headsets today are impressively immersive, but there’s still no question that what you’re looking at is, well… virtual.

Inside of Meta’s Reality Labs Research division, the company uses the term ‘visual Turing Test’ to represent the bar that needs to be met to convince your visual system that what’s inside the headset is actually real. The term is borrowed from the Turing Test, which denotes the point at which a human can no longer tell the difference between another human and an artificial intelligence.

For a headset to completely convince your visual system that what’s inside the headset is actually real, Meta says you need a headset that can pass that “visual Turing Test.”

Four Challenges

Zuckerberg and Abrash outlined what they see as four key visual challenges that VR headsets need to solve before the visual Turing Test can be passed: varifocal, distortion, retina resolution, and HDR.

Briefly, here’s what those mean:

- Varifocal: the ability to focus on arbitrary depths of the virtual scene, with both essential focus functions of the eyes (vergence and accommodation).

- Distortion: lenses inherently distort the light that passes through them, often creating artifacts like color separation and pupil swim that make the existence of the lens obvious.

- Retina resolution: having enough resolution in the display to meet or exceed the resolving power of the human eye, such that there’s no evidence of underlying pixels.

- HDR: also known as high dynamic range, which describes the range of darkness and brightness that we experience in the real world (which almost no display today can properly emulate).

The Display Systems Research team at Reality Labs has built prototypes that function as proof-of-concepts for potential solutions to these challenges.

Varifocal

To address varifocal, the team developed a series of prototypes which it called ‘Half Dome’. In that series the company first explored a varifocal design which used a mechanically moving display to change the distance between the display and the lens, thus changing the focal depth of the image. Later the team moved to a solid-state electronic system which resulted in varifocal optics that were significantly more compact, reliable, and silent. We’ve covered the Half Dome prototypes in greater detail here if you want to know more.
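To get a rough feel for why moving the display changes the focal depth, here is a minimal sketch based on the textbook thin-lens equation. It is only an illustration, not Meta's actual optical design: the function name, the 40mm focal length, and the display distances are all hypothetical, and a real headset lens is far from an ideal thin lens.

```python
def virtual_image_distance_m(focal_length_mm: float, display_distance_mm: float) -> float:
    """Distance (in meters) at which an ideal thin lens places the virtual image.

    Thin-lens equation: 1/f = 1/d_o + 1/d_i. When the display sits inside the
    focal length (d_o < f), d_i is negative, meaning a virtual image on the
    display's side of the lens. We return its magnitude.
    """
    if not 0 < display_distance_mm <= focal_length_mm:
        raise ValueError("display must sit between the lens and its focal point")
    if display_distance_mm == focal_length_mm:
        # Display exactly at the focal point: image appears at optical infinity.
        return float("inf")
    image_mm = (focal_length_mm * display_distance_mm) / (focal_length_mm - display_distance_mm)
    return image_mm / 1000.0


if __name__ == "__main__":
    # Hypothetical numbers chosen purely for illustration.
    focal_length_mm = 40.0
    for display_mm in (38.0, 39.0, 39.5, 40.0):
        depth = virtual_image_distance_m(focal_length_mm, display_mm)
        print(f"display at {display_mm:.1f}mm -> focal depth {depth:.2f}m")
```

Under these assumed numbers, shifting the display by just 2mm moves the apparent focal depth from roughly 0.76m all the way out to infinity, which hints at why even small mechanical movements can be enough to cover a wide range of focus.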

Virtual Reality… For Lenses

As for distortion, Abrash explained that experimenting with lens designs and distortion-correction algorithms that are specific to those lens designs is a cumbersome process. Novel lenses can’t be made quickly, he said, and once they are made they still need to be carefully integrated into a headset.

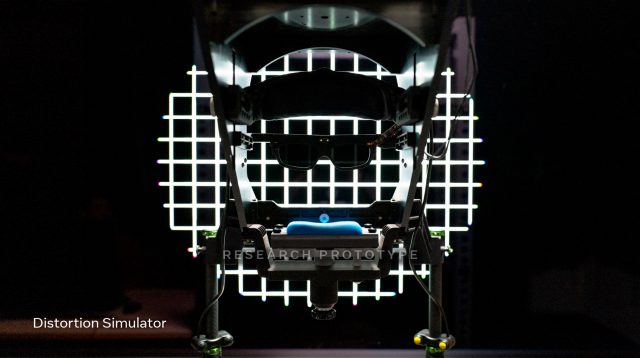

To allow the Display Systems Research team to work more quickly on the issue, the team built a ‘distortion simulator’, which emulates a VR headset using a 3D TV, and simulates lenses (and their corresponding distortion-correction algorithms) in software.

Doing so has allowed the team to iterate on the problem more quickly, wherein the key challenge is to dynamically correct lens distortions as the eye moves, rather than merely correcting for what is seen when the eye is looking in the immediate center of the lens.

Retina Resolution

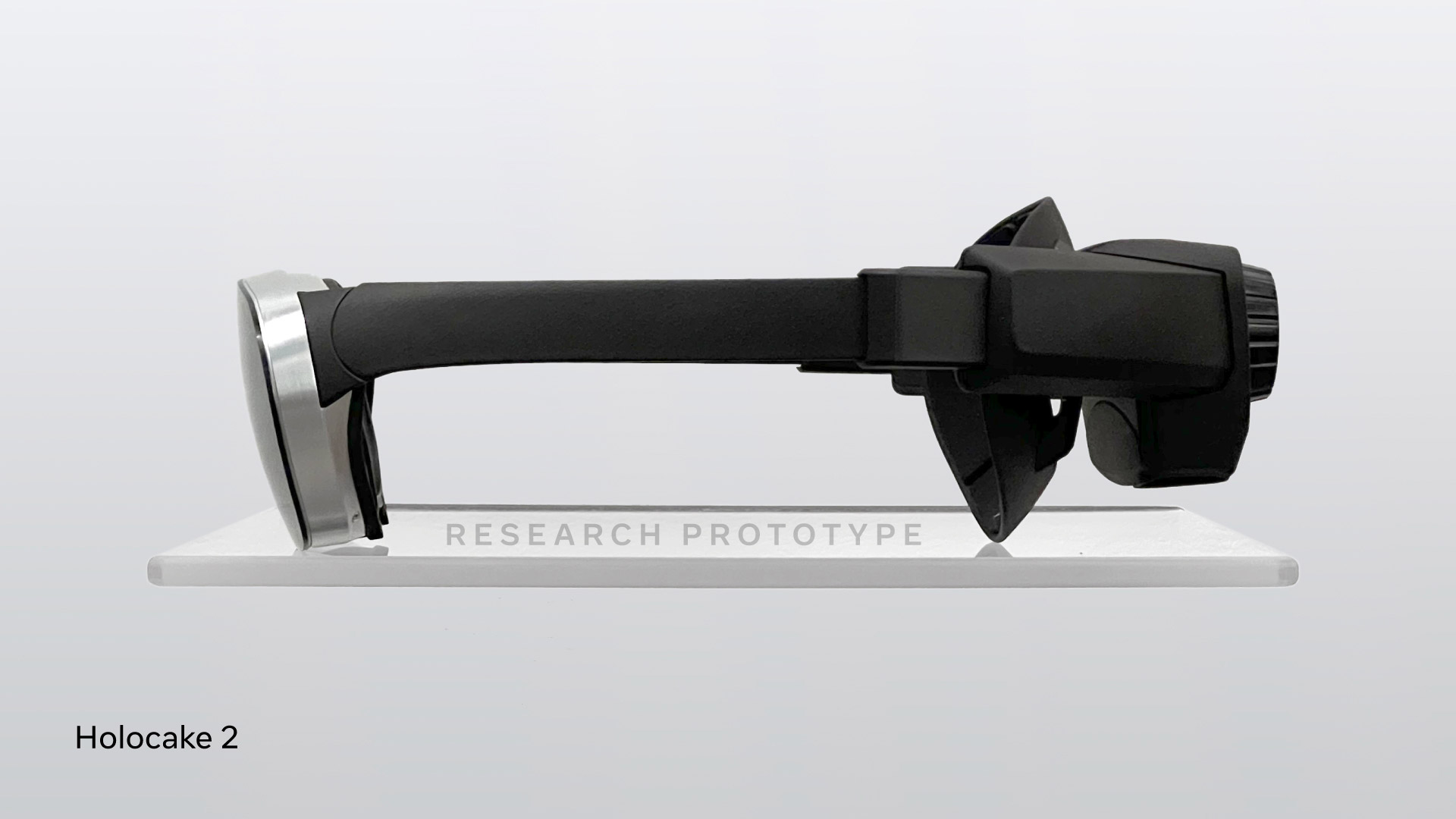

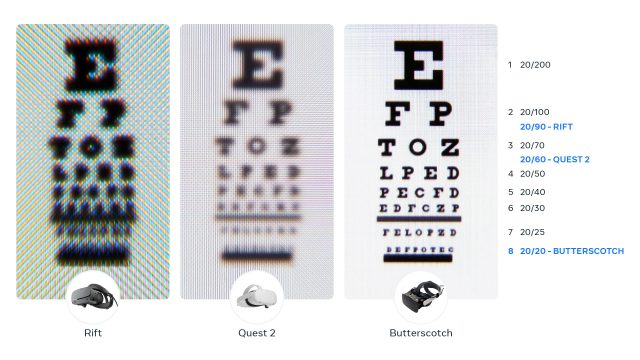

On the retina resolution front, Meta revealed a previously unseen headset prototype called Butterscotch, which the company says achieves a retina resolution of 60 pixels per degree, allowing for 20/20 vision. To do so, they used extremely pixel-dense displays and reduced the field-of-view—in order to concentrate the pixels over a smaller area—to about half the size of Quest 2. The company says it also developed a “hybrid lens” that would “fully resolve” the increased resolution, and it shared through-the-lens comparisons between the original Rift, Quest 2, and the Butterscotch prototype.

While there are already headsets out there today that offer retina resolution, like Varjo’s VR-3 headset, only a small area in the middle of the view (27° × 27°) hits the 60 PPD mark; anything outside of that area drops to 30 PPD or lower. Ostensibly Meta’s Butterscotch prototype has 60 PPD across the entirety of its field-of-view, though the company didn’t explain to what extent resolution is reduced toward the edges of the lens.
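The trade-off between pixel density and field-of-view comes down to simple arithmetic, sketched below. The 60 PPD figure lines up with 20/20 vision because 20/20 acuity corresponds to resolving detail about one arcminute wide, and there are 60 arcminutes in a degree. The function names and the 1,800-pixel panel are hypothetical, and dividing pixels by degrees only gives an average; real lenses spread pixels unevenly across the view.

```python
import math


def average_ppd(horizontal_pixels: int, horizontal_fov_deg: float) -> float:
    """Average pixels per degree, assuming pixels are spread evenly over the FOV."""
    return horizontal_pixels / horizontal_fov_deg


def pixels_needed(target_ppd: float, fov_deg: float) -> int:
    """Pixels required along one axis to reach target_ppd across fov_deg."""
    return math.ceil(target_ppd * fov_deg)


if __name__ == "__main__":
    # Hypothetical panel: 1,800 pixels wide (not the spec of any real headset).
    panel_width = 1800
    print(average_ppd(panel_width, 90))  # wide view: 20.0 PPD
    print(average_ppd(panel_width, 45))  # half the view: 40.0 PPD

    # Pixels per axis needed to hold 60 PPD over a 27 degree region.
    print(pixels_needed(60, 27))  # 1620
```

Halving the field-of-view doubles the average pixel density of the same panel, which is the lever the article describes Butterscotch pulling, alongside denser displays.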

