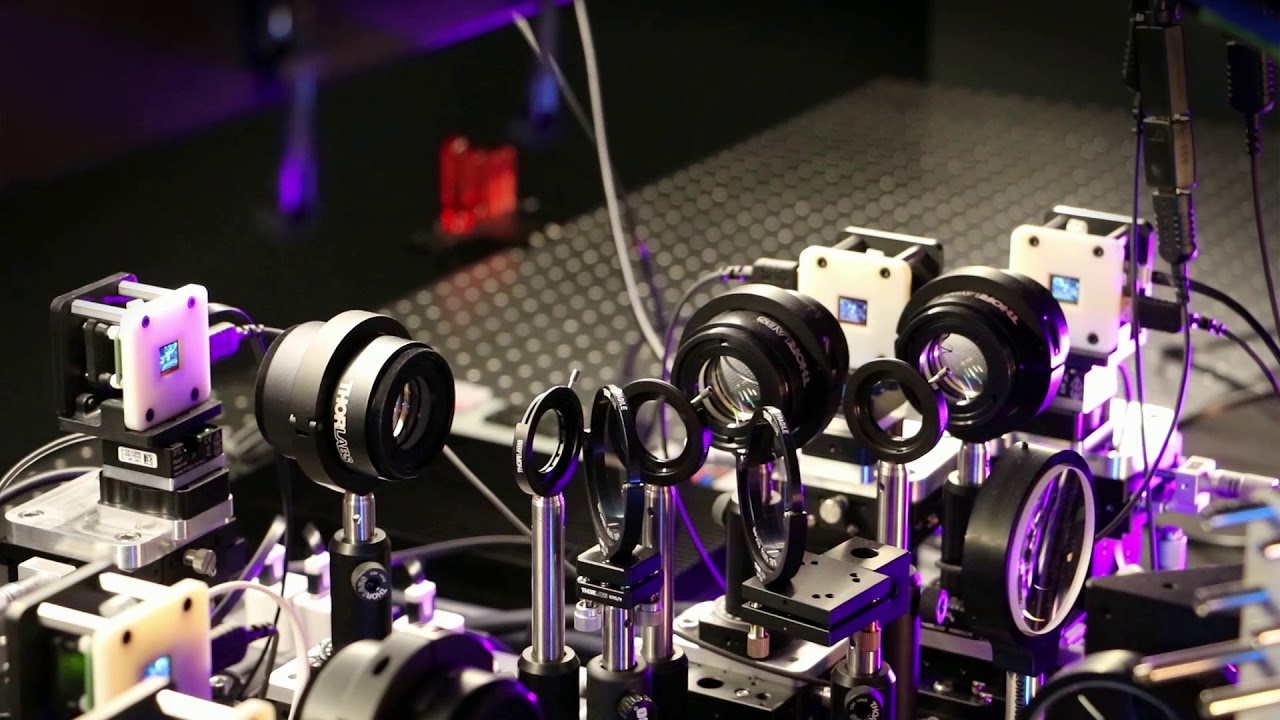

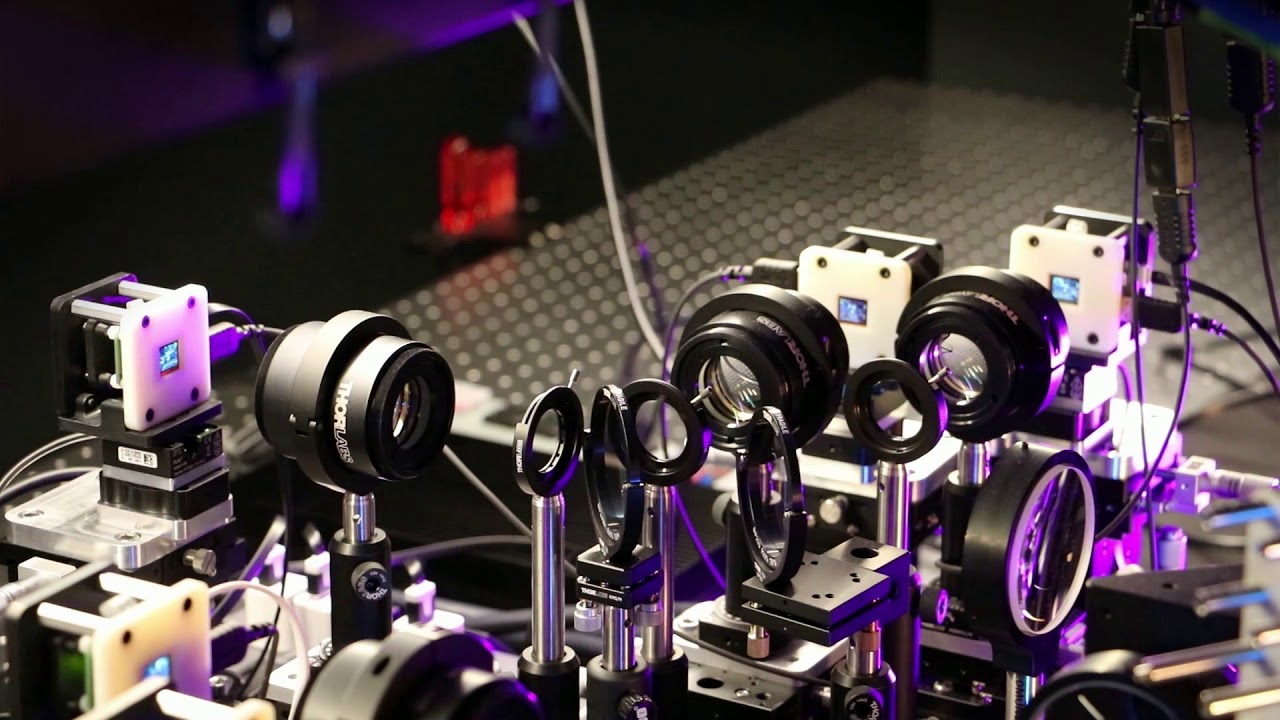

Oculus Research, Oculus’s R&D division, recently published a paper that goes deeper into its eye-tracking-assisted multifocal display technology, detailing the creation of something the researchers dub a “perceptual” testbed.

Current consumer VR headsets universally present the user with a single, fixed-focus display plane. This creates what’s known in the field as the vergence-accommodation conflict: the eyes converge (rotate inward) on the distance of the virtual object being viewed, but must focus (accommodate) at the fixed distance of the display plane. Because the display can’t provide realistic focus cues, the user can’t focus naturally, which makes for a less realistic and less comfortable viewing experience. You can read more about the vergence-accommodation conflict in our article on Oculus Research’s experimental focal surface display.
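To put rough numbers on the conflict, the sketch below compares where the eyes converge with where they must focus. It is a minimal illustration, not anything from Oculus Research: the 63 mm interpupillary distance and the 1.5 m fixed focal distance are assumed round figures, and real headsets vary. Focus mismatch is expressed in diopters (inverse meters), the usual unit for accommodation.

```python
import math

def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two lines of sight when both eyes fixate a point
    straight ahead at distance_m (symmetric geometry, assumed 63 mm IPD)."""
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))

def focus_conflict_diopters(virtual_distance_m: float, focal_plane_m: float) -> float:
    """Mismatch between the vergence distance (the virtual object) and the
    accommodation distance (the fixed focal plane), in diopters (1/m)."""
    return abs(1.0 / virtual_distance_m - 1.0 / focal_plane_m)

# Assumed fixed focal distance for illustration; actual headsets differ.
FOCAL_PLANE_M = 1.5

for d in (0.3, 0.5, 1.5, 10.0):
    print(f"object at {d:>4} m: vergence {vergence_angle_deg(d):5.2f} deg, "
          f"conflict {focus_conflict_diopters(d, FOCAL_PLANE_M):.2f} D")
```

With these assumed values the mismatch grows quickly for nearby objects: an object half a meter away produces a conflict of more than one diopter, while only an object at exactly the focal distance produces none.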

By display plane, we mean one slice in depth of the rendered scene. With accurate eye tracking and several independent display planes, each presenting content from a different depth range between the foreground and the background, you can mimic the retinal blur of natural viewing, where objects nearer or farther than the point of focus appear out of focus. Oculus Research’s perceptual testbed goes a few steps further, however.
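For a sense of how scene content can be assigned to planes, here is a sketch of linear depth blending, a simpler and commonly described scheme in which each pixel’s intensity is split between the two planes that bracket its depth, interpolated in diopters. This is not the optimized, gaze-contingent decomposition Oculus Research describes; the function name `blend_weights` and the plane distances are hypothetical.

```python
def blend_weights(depth_m: float, plane_depths_m: list[float]) -> list[float]:
    """Split a pixel's intensity between the two focal planes that bracket its
    depth, interpolating linearly in diopters. Requires at least one plane."""
    pixel_d = 1.0 / depth_m
    planes_d = [1.0 / p for p in plane_depths_m]
    weights = [0.0] * len(planes_d)
    # Plane indices ordered from nearest (largest diopter) to farthest.
    order = sorted(range(len(planes_d)), key=lambda i: planes_d[i], reverse=True)
    nearest, farthest = order[0], order[-1]
    if pixel_d >= planes_d[nearest]:   # closer than the nearest plane: clamp
        weights[nearest] = 1.0
        return weights
    if pixel_d <= planes_d[farthest]:  # beyond the farthest plane: clamp
        weights[farthest] = 1.0
        return weights
    for near, far in zip(order, order[1:]):
        if planes_d[far] <= pixel_d <= planes_d[near]:
            # t = 1 puts everything on the nearer plane, t = 0 on the farther one.
            t = (pixel_d - planes_d[far]) / (planes_d[near] - planes_d[far])
            weights[near] = t
            weights[far] = 1.0 - t
            return weights
    return weights  # unreachable for valid input; kept for completeness

# Hypothetical four-plane layout, distances in meters.
PLANES_M = [0.25, 0.5, 1.0, 4.0]
print(blend_weights(1 / 1.5, PLANES_M))
```

A pixel at about 0.67 m (1.5 D) sits halfway between the 0.5 m (2 D) and 1 m (1 D) planes in diopter terms, so its intensity is split roughly evenly between them. Interpolating in diopters rather than meters matches how the eye’s focus error scales.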

The goal of the project, Oculus researchers say, is to provide a testbed for better understanding the computational demands and hardware accuracy required by such a system: one that not only tracks the user’s gaze but also adjusts the multi-planar scene to correct for eye and head movement, something previous multifocal displays don’t account for.
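Some back-of-the-envelope geometry shows why eye movement matters. Because the eye rotates about a point behind the pupil, a change in gaze also moves the pupil sideways, and content on planes at different distances then shifts by different angles, so planes aligned for one eye position no longer line up. The sketch below estimates that misalignment with small-angle parallax; the 10 mm pupil-to-rotation-center distance is an assumed round figure, and this is our illustration rather than the model in Oculus Research’s paper.

```python
import math

def pupil_shift_m(rotation_deg: float, pupil_to_center_m: float = 0.010) -> float:
    """Sideways pupil translation when the eye rotates by rotation_deg about a
    center assumed to sit pupil_to_center_m behind the pupil."""
    return pupil_to_center_m * math.sin(math.radians(rotation_deg))

def plane_misalignment_arcmin(shift_m: float, near_m: float, far_m: float) -> float:
    """Small-angle parallax between content on a near and a far focal plane
    after the pupil translates by shift_m, in arcminutes."""
    angle_rad = shift_m * abs(1.0 / near_m - 1.0 / far_m)
    return math.degrees(angle_rad) * 60.0

# Hypothetical example: a 20-degree gaze shift, planes at 0.5 m and 2 m.
shift = pupil_shift_m(20.0)
print(f"pupil shift {shift * 1000:.2f} mm, "
      f"misalignment {plane_misalignment_arcmin(shift, 0.5, 2.0):.1f} arcmin")
```

Under these assumptions a 20-degree gaze shift misaligns the two planes by well over ten arcminutes, many times the roughly one-arcminute detail a normal eye can resolve, which is why a fixed decomposition breaks down once the eye moves.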

“We wanted to improve the capability of accurately measuring the accommodation response and presenting the highest quality images possible,” says Research Scientist Kevin MacKenzie.

“It’s amazing to think that after many decades of research by very talented vision scientists, the question about how the eye’s focusing system is driven—and what stimulus it uses to optimize focus—is still not well delineated,” MacKenzie explains. “The most exciting part of the system build is in the number of experimental questions we can answer with it—questions that could only be answered with this level of integration between stimulus presentation and oculomotor measurement.”

The team maintains that its method is compatible with current GPU implementations and achieves a “three-orders-of-magnitude speedup over previous work.” This, they contend, will help establish practical eye-tracking and rendering requirements for future multifocal displays.

“The ability to prototype new hardware and software as well as measure perception and physiological responses of the viewer has opened not only new opportunities for product development, but also for advancing basic vision science,” adds Research Scientist Marina Zannoli. “This platform should help us better understand the role of optical blur in depth perception as well as uncover the underlying mechanisms that drive convergence and accommodation. These two areas of research will have a direct impact on our ability to create comfortable and immersive experiences in VR.”