DeepFocus is Facebook’s AI-driven renderer that’s said to produce natural-looking blur in real time, something that’s poised to go hand-in-hand with the varifocal displays of tomorrow. Today, Facebook announced that DeepFocus is going open source; while the company’s wide field of view (FOV) prototype ‘Half Dome’ may be proprietary, its deep learning tool will be “hardware agnostic.”

When you hold up your hand in front of you, your eyes naturally converge and accommodate, bringing your hand into focus. The experience isn’t the same in today’s VR headsets, however, since the light is coming from a fixed source, sending your eyes into overdrive to resolve near-field images. This is where varifocal displays and eye tracking come in, as the once-fixed focal length becomes variable to match your eyes depending on where you’re looking at any given moment.

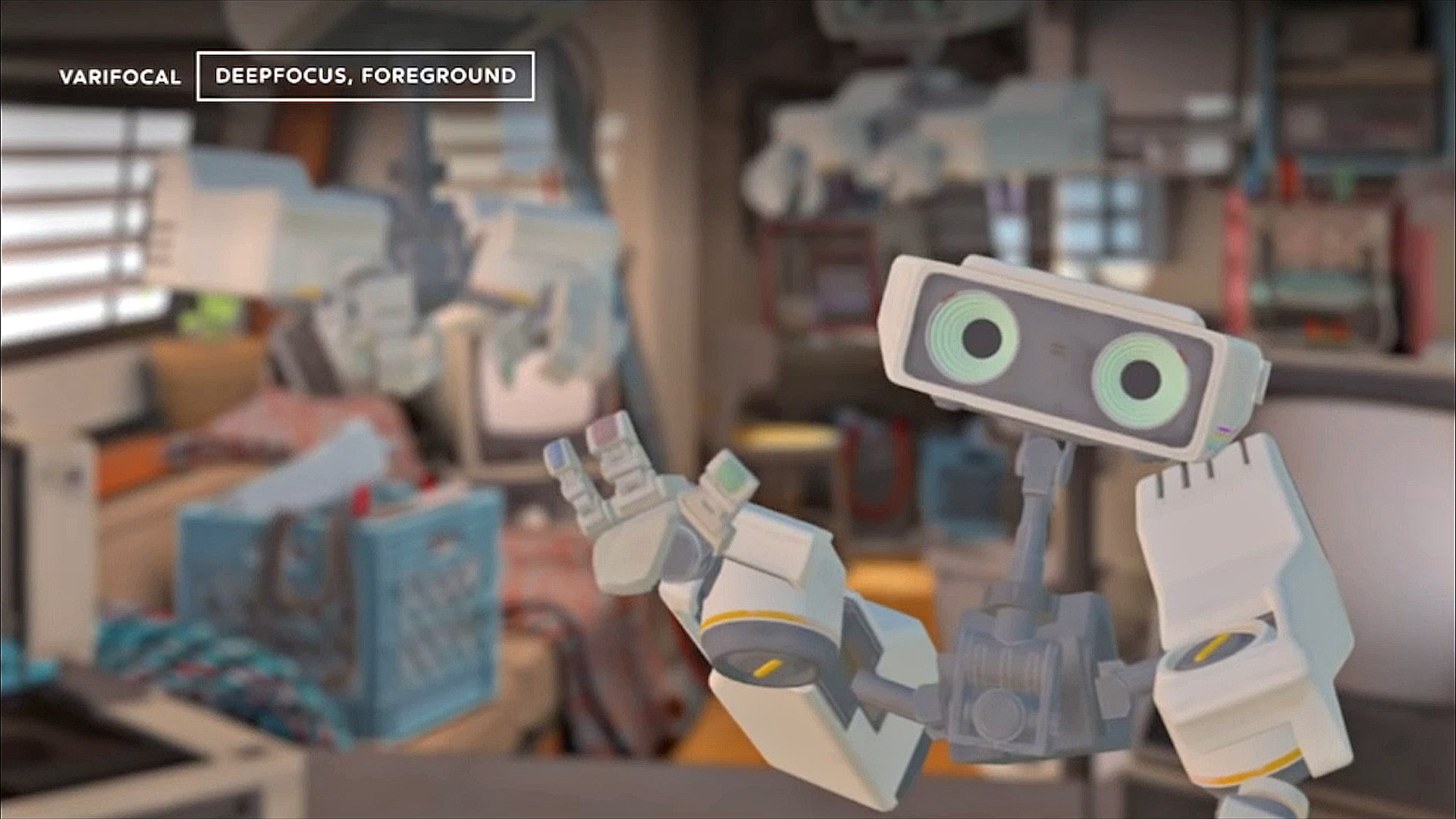

Essentially, a varifocal display will let you focus on objects regardless of their distance from you, making the overall experience more comfortable and immersive. But the missing piece of the puzzle here is the ability for the headset to also replicate natural-looking defocus blur, something that happens when you focus on your hand and the background goes fuzzy. Enter DeepFocus.

In a research paper presented at SIGGRAPH Asia 2018, the company says DeepFocus is inspired by “increasing evidence of the important role retinal defocus blur plays in driving accommodative responses, as well as the perception of depth and physical realism.”

Unlike more traditional AI systems used for deep learning-based image analysis, DeepFocus is said to process visuals while maintaining the ultrasharp image resolutions necessary for high-quality VR.

That means not only will things look more realistic in varifocal VR headsets and even AR headsets with light-field displays, but the rendered blur will also help mitigate the eyestrain associated with vergence-accommodation conflict.

“This network is demonstrated to accurately synthesize defocus blur, focal stacks, multilayer decompositions, and multiview imagery using only commonly available RGB-D images, enabling real-time, near-correct depictions of retinal blur with a broad set of accommodation-supporting HMDs,” Facebook researchers say.
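To give a rough sense of what “defocus blur from RGB-D” means, here is a minimal sketch of the classical thin-lens relationship that any such renderer has to approximate: the farther a pixel’s depth is from the focus distance (measured in diopters, or 1/meters), the larger its blur. This is not DeepFocus’s neural network or any part of Facebook’s code; the function names, the 4 mm pupil size, and the toy depth map are all assumptions made for illustration.

```python
import math

def blur_angle_arcmin(depth_m, focus_m, pupil_mm=4.0):
    """Approximate angular diameter of the defocus blur circle, in arcminutes.

    Small-angle thin-lens approximation: blur (radians) is the pupil
    diameter (meters) times the defocus in diopters. The 4 mm default
    pupil is an illustrative assumption, not a value from the article.
    """
    defocus_diopters = abs(1.0 / depth_m - 1.0 / focus_m)
    blur_rad = (pupil_mm / 1000.0) * defocus_diopters
    return math.degrees(blur_rad) * 60.0

def blur_map(depth_map_m, focus_m, pupil_mm=4.0):
    """Per-pixel blur size for a depth map given as a list of rows (meters)."""
    return [[blur_angle_arcmin(d, focus_m, pupil_mm) for d in row]
            for row in depth_map_m]

# Hypothetical 2x2 depth map: a hand at 0.5 m in front of a wall at 2 m.
depth = [[0.5, 2.0],
         [0.5, 2.0]]
blur = blur_map(depth, focus_m=0.5)  # the eye is focused on the hand
```

With the eye focused on the hand, the hand pixels get zero blur while the wall pixels get a blur circle of roughly 20 arcminutes, which is the “background goes fuzzy” effect described above. A real renderer then has to turn that per-pixel blur size into a convincing image, which is the part DeepFocus is said to handle with deep learning.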

Facebook will be publishing both the source and neural net training data today “for engineers developing new VR systems, vision scientists, and other researchers studying perception,” the company says in a blog post.

Introducing DeepFocus at Oculus Connect 5, Facebook Reality Labs’ chief scientist Michael Abrash said that Half Dome and DeepFocus are essentially “just the start for optics and displays, which is the poster child for how progress is accelerating.”