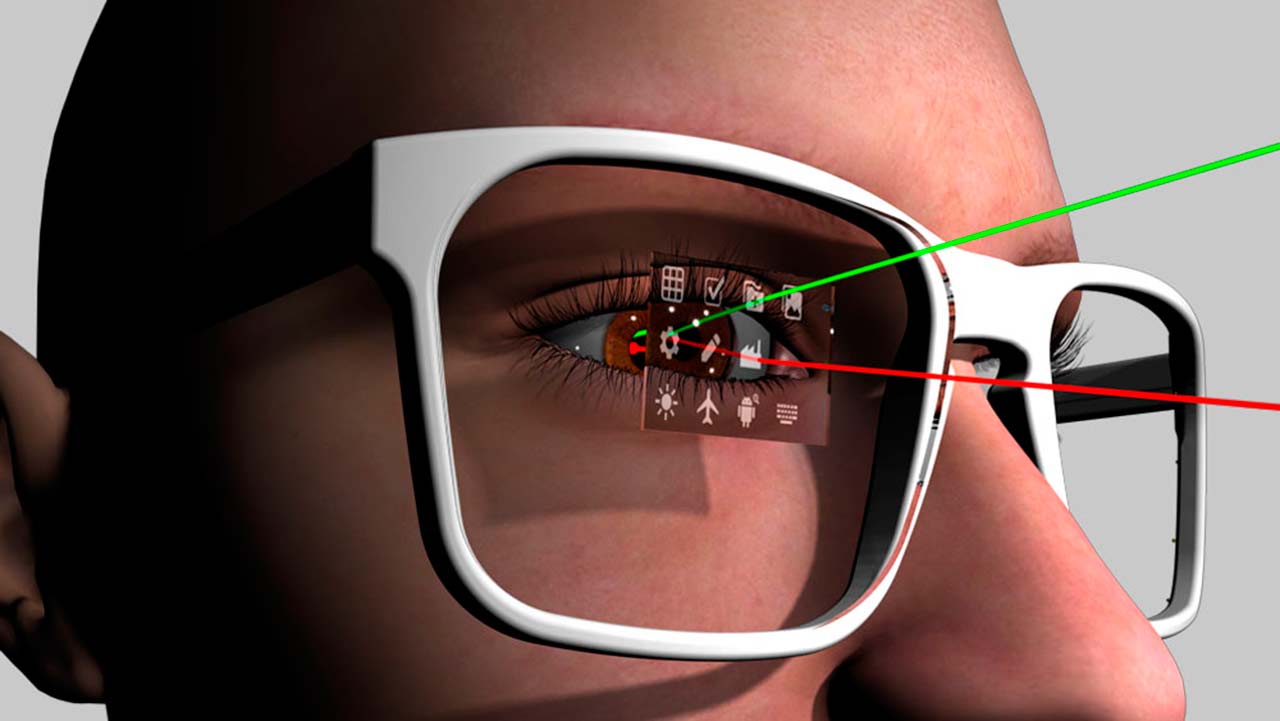

I had a chance to try a demo of Eyefluence, which has created a new model for eye interactions within virtual and augmented reality apps. Rather than using the normal eye interaction paradigm of dwelling focus or explicitly winking to select, Eyefluence has developed a more comfortable selection system that triggers discrete actions through natural eye movements. At times it felt magical, as if the technology was almost reading my mind, while at other times it was clear that this is an early iteration of an emerging visual language that is still being developed and defined.

I talked with Jim Marggraff, the CEO and founder of Eyefluence, at TechCrunch Disrupt last week about the strengths and weaknesses of their eye interaction model, as well as some of the applications that were prototyped within the demo.

Eyefluence’s overarching principle is to let the eyes do what the eyes are going to do, and Jim claims that extended use of their system doesn’t result in any measurable eye fatigue. While Jim concedes that most future VR and AR interactions will be a multimodal combination of using our hands, head, eyes, and voice, Eyefluence wants to push the limits of what’s possible by using the eyes alone.

After seeing their demo, I became convinced that there is a place for eye interactions within VR and AR 3D user interfaces. While the eyes are able to accomplish some amazing things on their own, I don’t think that most people are going to want to use only their eyes. In some applications, like mobile VR or augmented reality apps, I could see Eyefluence’s eye interaction working well as the primary or sole interaction mechanism. But it’s much more likely that eye interactions will be used to supplement and accelerate selection tasks alongside physical buttons on motion controllers and voice input in immersive applications.

Here’s an abbreviated version of the demo that I saw with Jim presenting at Augmented World Expo 2016:

Music: Fatality & Summer Trip