A team of researchers from NVIDIA Research and Stanford published a new paper demonstrating a pair of thin holographic VR glasses. The displays can show true holographic content, solving for the vergence-accommodation issue. Though the research prototypes demonstrating the principles were much smaller in field-of-view, the researchers claim it would be straightforward to achieve a 120° diagonal field-of-view.

Published ahead of this year’s SIGGRAPH 2022 conference, a team of researchers from NVIDIA Research and Stanford demonstrated a near-eye VR display that can be used to display flat images or holograms in a compact form-factor. The paper also explores the interconnected variables in the system that impact key display factors like field-of-view, eye-box, and eye-relief. Further, the researchers explore different algorithms for optimally rendering the image for the best visual quality.

Commercially available VR headsets haven’t improved in size much over the years largely because of an optical constraint. Most VR headsets use a single display and a simple lens. In order to focus the light from the display into your eye, the lens must be a certain distance from the display; any closer and the image will be out of focus.

Eliminating that gap between the lens and the display would unlock previously impossible form-factors for VR headsets; understandably there’s been a lot of R&D exploring how this can be done.

In NVIDIA-Stanford’s newly published paper, Holographic Glasses for Virtual Reality, the team shows that it built a holographic display using a spatial light modulator combined with a waveguide rather than a traditional lens.

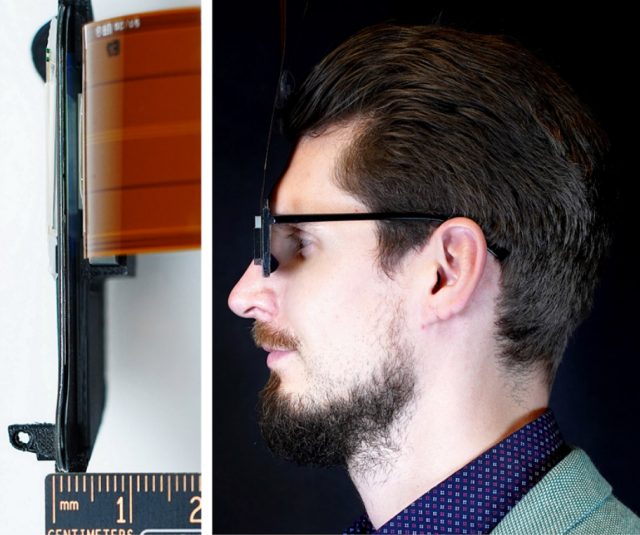

The team built both a large benchtop model, used to demonstrate core methods and experiment with different algorithms for rendering the image for optimal display quality, and a compact wearable model to demonstrate the form-factor. The images you see of the compact glasses-like form-factor don’t include the electronics to drive the display (as the size of that part of the system is out of scope for the research).

You may recall a little while back that Meta Reality Labs published its own work on a compact glasses-size VR headset. Although that work involves holograms (to form the system’s lenses), it is not a ‘holographic display’, which means it doesn’t solve the vergence-accommodation issue that’s common in many VR displays.

On the other hand, the NVIDIA-Stanford researchers write that their Holographic Glasses system is in fact a holographic display (thanks to the use of a spatial light modulator), which they tout as a unique advantage of their approach. However, the team also writes that it’s possible to display typical flat images on the display as well (which, like contemporary VR headsets, can converge for a stereoscopic view).

Not only that, but the Holographic Glasses project touts a mere 2.5mm thickness for the entire display, significantly thinner than the 9mm thickness of the Reality Labs project (which was already impressively thin!).

As with any good paper though, the NVIDIA-Stanford team is quick to point out the limitations of their work.

For one, their wearable system has a tiny 22.8° diagonal field-of-view with an equally tiny 2.3mm eye-box, both of which are way too small to be viable for a practical VR headset.

However, the researchers write that the limited field-of-view is largely due to their experimental combination of novel components that aren’t optimized to work together. Drastically expanding the field-of-view, they explain, is largely a matter of choosing complementary components.

“[…] the [system’s field-of-view] was mainly limited by the size of the available [spatial light modulator] and the focal length of the GP lens, both of which could be improved with different components. For example, the focal length can be halved without significantly increasing the total thickness by stacking two identical GP lenses and a circular polarizer [Moon et al. 2020]. With a 2-inch SLM and a 15mm focal length GP lens, we could achieve a monocular FOV of up to 120°”
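The 120° figure in that quote can be sanity-checked with simple geometry. What follows is a minimal sketch, not the paper's own derivation: it assumes the SLM sits at the focal plane of the lens, that "2-inch" describes the extent of the SLM along the direction being measured, and that aberrations and diffraction limits can be ignored. The function name `monocular_fov_deg` is hypothetical.

```python
import math

INCH_MM = 25.4  # millimetres per inch


def monocular_fov_deg(slm_size_mm: float, focal_length_mm: float) -> float:
    """Full angle subtended by an SLM of the given extent at the focal plane of a lens.

    Assumption: simple pinhole-style geometry, FOV = 2 * atan(size / (2 * f)).
    """
    return math.degrees(2.0 * math.atan(slm_size_mm / (2.0 * focal_length_mm)))


# Values taken from the quote: a 2-inch SLM and a 15mm focal length GP lens.
fov = monocular_fov_deg(2 * INCH_MM, 15.0)
print(f"Estimated monocular FOV: {fov:.1f} degrees")

# Halving the focal length (e.g. by stacking two GP lenses) widens the FOV
# for the same SLM size.
print(monocular_fov_deg(2 * INCH_MM, 30.0) < fov)
```

Under these assumptions the estimate lands a little under 120°, which is consistent with the researchers' "up to 120°" wording.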

As for the 2.3mm eye-box (the volume in which the rendered image can be seen), it’s way too small for practical use. However, the researchers write that they experimented with a straightforward way to expand it.

With the addition of eye-tracking, they show, the eye-box could be dynamically expanded up to 8mm by changing the angle of the light that’s sent into the waveguide. Granted, 8mm is still a very tight eye-box, and might be too small for practical use due to variations in eye-relief distance and how the glasses rest on the head, from one user to the next.

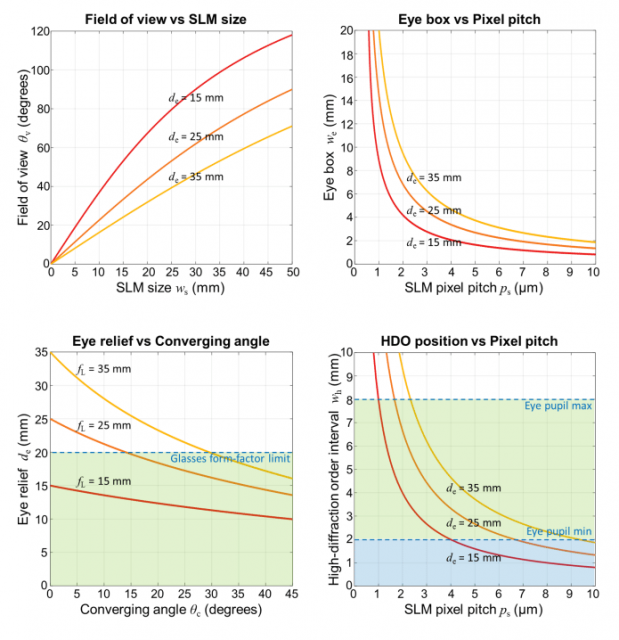

But there are variables in the system that can be adjusted to change key display factors, like the eye-box. Through their work, the researchers established the relationship between these variables, giving a clear look at what tradeoffs would need to be made to achieve different outcomes.

As they show, eye-box size depends on the pixel pitch (distance between pixels) of the spatial light modulator, while field-of-view depends on the overall size of the spatial light modulator. Limitations on eye-relief and converging angle are also shown, relative to a sub-20mm eye-relief (which the researchers consider the upper limit of a true ‘glasses’ form-factor).
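To get a feel for that tradeoff, here is a rough sketch using common first-order approximations for SLM-based holographic displays. These are not necessarily the exact expressions used in the paper. It assumes the eye-box is set by the SLM's maximum diffraction angle (which grows as pixel pitch shrinks) and that field-of-view is set by the SLM's physical width. The wavelength, pixel count, pitch, and focal length values are all hypothetical, chosen only for illustration.

```python
import math


def eyebox_mm(wavelength_nm: float, focal_length_mm: float, pixel_pitch_um: float) -> float:
    """Approximate eye-box width from the SLM's maximum diffraction angle.

    Assumption: diffraction half-angle = asin(wavelength / (2 * pitch)),
    eye-box width = 2 * f * tan(half-angle).
    """
    wavelength_mm = wavelength_nm * 1e-6
    pitch_mm = pixel_pitch_um * 1e-3
    half_angle = math.asin(wavelength_mm / (2.0 * pitch_mm))
    return 2.0 * focal_length_mm * math.tan(half_angle)


def fov_deg(pixel_count: int, pixel_pitch_um: float, focal_length_mm: float) -> float:
    """Approximate field-of-view from the SLM's physical width (pixel count * pitch)."""
    width_mm = pixel_count * pixel_pitch_um * 1e-3
    return math.degrees(2.0 * math.atan(width_mm / (2.0 * focal_length_mm)))


# Hypothetical parameters: green light, 1920 pixels across, 15mm focal length.
WAVELENGTH_NM = 520.0
PIXELS = 1920
FOCAL_MM = 15.0

for pitch_um in (8.0, 4.0, 2.0):
    box = eyebox_mm(WAVELENGTH_NM, FOCAL_MM, pitch_um)
    fov = fov_deg(PIXELS, pitch_um, FOCAL_MM)
    print(f"pitch {pitch_um:>4.1f} um -> eye-box {box:5.2f} mm, FOV {fov:5.1f} deg")
```

With a fixed pixel count, shrinking the pitch in this model enlarges the eye-box but shrinks the SLM and with it the field-of-view. Getting both at once would take a larger SLM with finer pixels.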

An analysis of this “design trade space,” as they call it, was a key part of the paper.

“With our design and experimental prototypes, we hope to stimulate new research and engineering directions toward ultra-thin all-day-wearable VR displays with form-factors comparable to conventional eyeglasses,” they write.

The paper is credited to researchers Jonghyun Kim, Manu Gopakumar, Suyeon Choi, Yifan Peng, Ward Lopes, and Gordon Wetzstein.
