Meta has released a new Acoustic Ray Tracing feature that it says will make it easier for developers to add more immersive audio to their VR games and apps.

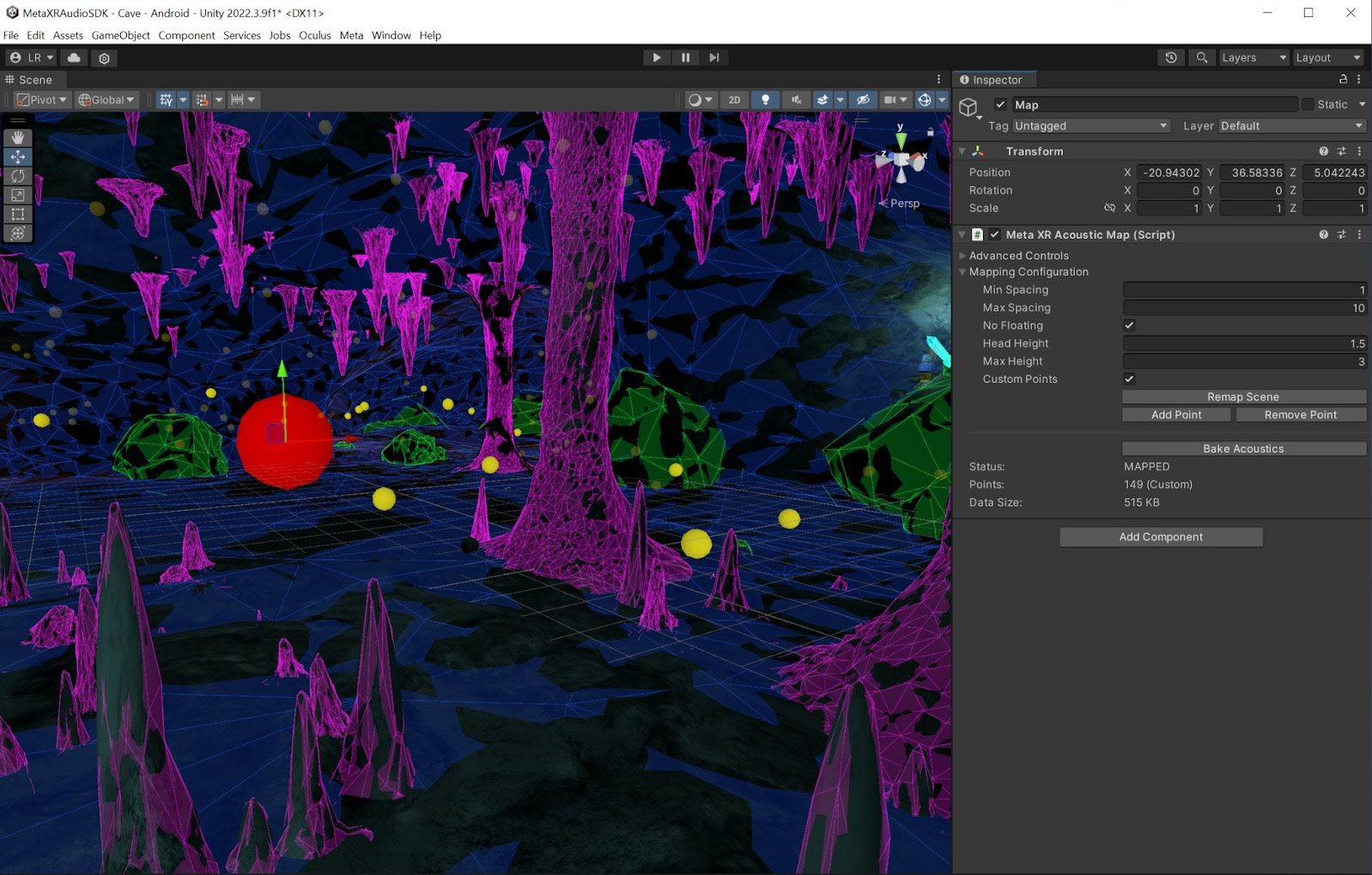

Released as part of the Audio SDK for Unity and Unreal, Acoustic Ray Tracing is designed to automate the complex process of simulating realistic acoustics, which has traditionally been done through labor-intensive manual methods.

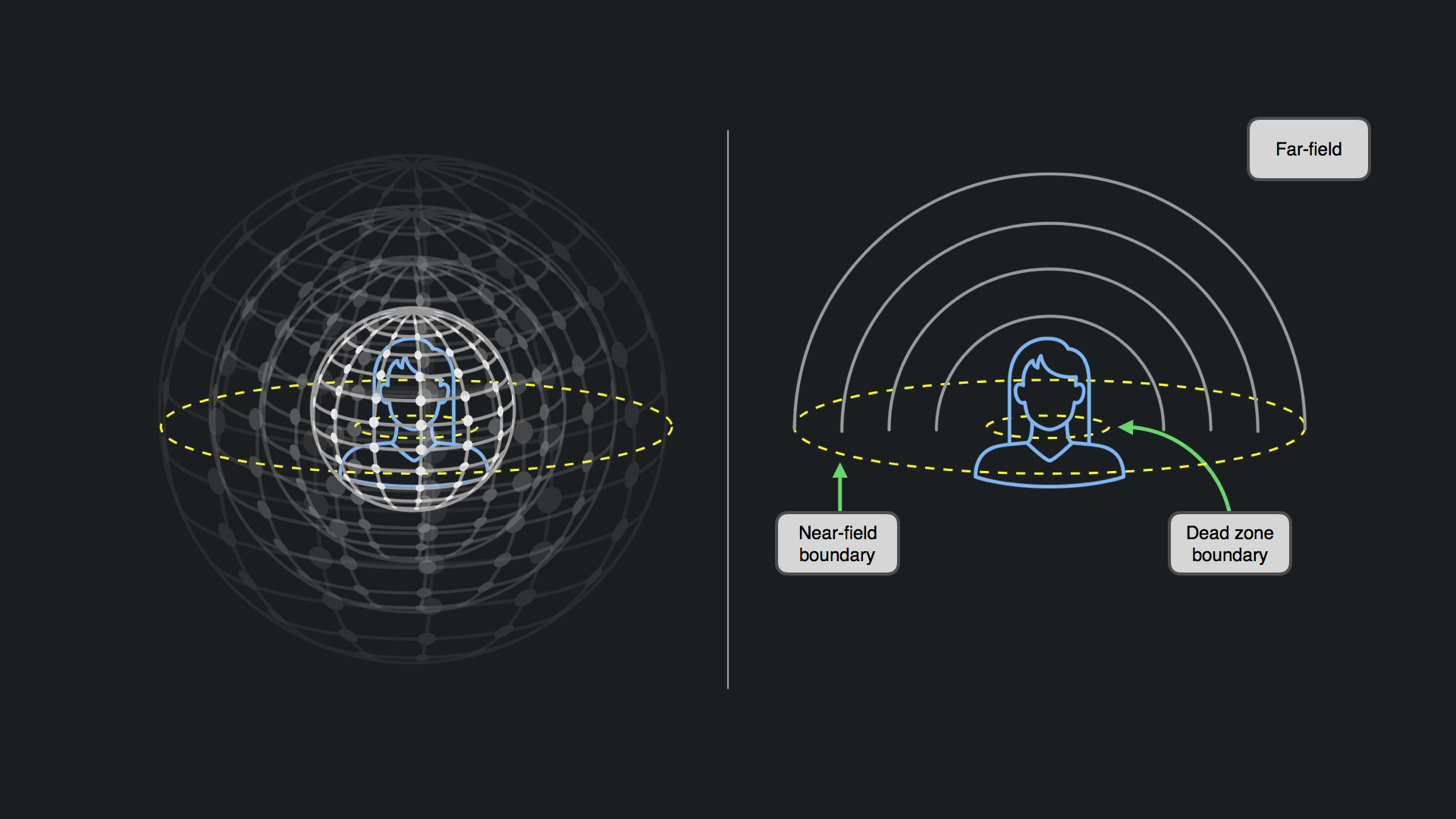

According to a Meta developer blog post, Acoustic Ray Tracing can simulate sound reflections and reverberation, as well as effects like diffraction, occlusion, and obstruction, all of which are critical to making spatial audio sound closer to the real thing.

The new feature, which Meta says delivers “a more natural audio experience” than its older Shoebox Room Acoustics model, also supports complex environments.

“Our acoustics features can handle arbitrarily complex geometry, ensuring that even the most intricate environments are accurately simulated,” Meta says. “Whether your VR scene is a winding cave, a bustling cityscape, or an intricate indoor environment, our technology can manage the complexity without compromising on performance.”

Meta says the Acoustic Ray Tracing system integrates with existing workflows, supporting popular audio middleware such as FMOD and Wwise. Notably, the technology is being used in the upcoming Quest exclusive Batman: Arkham Shadow.

“One of the standout benefits of our new acoustics features is their performance on mobile hardware. While other solutions in the market require powerful PCs due to their high performance cost, our SDK is optimized to run efficiently on mobile devices such as Quest headsets. This opens up new possibilities for high-quality audio simulation in mobile applications, making immersive audio more accessible than ever before,” the company says.

You can find out more about Meta’s Acoustic Ray Tracing here. You’ll also find documentation on Meta’s Audio SDK (Unity | Unreal) and Acoustic Ray Tracing (Unity | Unreal).
